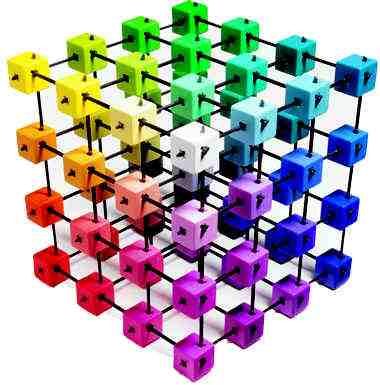

The Motivated Automatic-Deliberative architecture

This biologically inspired architecture is based on ideas from modern psychology expressed by Shiffrin and Schneider, and so it considers two levels: the automatic and the deliberative. In the AD architecture, both levels are formed by skills, which endow the robot with different sensory and motor capacities and process information.

In our AD architecture implementation, deliberative skills are based on these activities, and we consider that only one deliberative skill can be activated at a time.

In the AD implementation, the automatic level is mainly formed by skills related to sensors and actuators. Automatic skills can run in parallel, and they can be merged to achieve more complex skills.

The automatic level is linked to modules that communicate with hardware, sensors, and motors. Reasoning processes are placed at the deliberative level. Communication between the two levels is bidirectional and is carried out through the Short-Term Memory and events.

Events are the mechanism the architecture uses to work cooperatively. An event is an asynchronous signal, emitted and captured, that coordinates processes. The design implements the publisher/subscriber pattern, so an element that generates events does not know whether those events are received and processed by others.
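The publisher/subscriber decoupling described above can be illustrated with a minimal sketch. The class `EventManager`, its methods, and the event names are hypothetical and are not taken from the AD implementation; delivery here is synchronous for brevity, whereas the architecture's events are asynchronous.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventManager:
    """Hypothetical event broker; not the AD architecture's actual API."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Handler) -> None:
        """Register a callback to run whenever `event_name` is emitted."""
        self._handlers[event_name].append(handler)

    def emit(self, event_name: str, payload: Any = None) -> int:
        """Deliver the event to current subscribers; return how many received it."""
        handlers = list(self._handlers.get(event_name, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)


events = EventManager()
received = []
events.subscribe("OBSTACLE_DETECTED", received.append)
events.emit("OBSTACLE_DETECTED", {"distance_m": 0.4})
events.emit("BATTERY_LOW")  # nobody subscribed; the emitter is unaffected
```

The emitter only names the event; it never holds a reference to the skills that react to it, which is what allows skills to be added or removed without touching the publisher.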

The Short-Term Memory is a memory area that can be accessed by different processes and where the most important data is stored. Different data types can be distributed and are available to all elements of the AD architecture. The current and the previous value, as well as the date of the data capture, are stored. Therefore, when new data is written, the previous data is not eliminated; it is kept as a previous version. The Short-Term Memory allows data structures to be registered and eliminated and particular data to be read and written, and several skills can share the same data. It is based on the blackboard pattern.
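As an illustration of the blackboard behavior described above, the following sketch keeps the current value, the previous value, and a capture timestamp for each registered item. All names (`ShortTermMemory`, `Entry`, `battery_level`) are assumptions made for the example, and the sketch omits the inter-process sharing and locking a real implementation would need.

```python
import time
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class Entry:
    current: Any = None
    previous: Any = None
    timestamp: Optional[float] = None  # capture time of `current`


class ShortTermMemory:
    """Hypothetical blackboard; names are illustrative only."""

    def __init__(self) -> None:
        self._entries: Dict[str, Entry] = {}

    def register(self, key: str) -> None:
        """Create an empty slot for `key` if it does not exist yet."""
        self._entries.setdefault(key, Entry())

    def unregister(self, key: str) -> None:
        """Remove the slot for `key`; unknown keys are ignored."""
        self._entries.pop(key, None)

    def write(self, key: str, value: Any) -> None:
        entry = self._entries[key]  # KeyError if the key was never registered
        entry.previous = entry.current  # keep the old value as the previous version
        entry.current = value
        entry.timestamp = time.time()

    def read(self, key: str) -> Entry:
        return self._entries[key]


stm = ShortTermMemory()
stm.register("battery_level")
stm.write("battery_level", 0.9)
stm.write("battery_level", 0.8)
entry = stm.read("battery_level")
```

After the second write, `entry.current` holds the new value and `entry.previous` holds the one it replaced, so any skill reading the item can compare the two.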

On the other hand, the Long-Term Memory has been implemented as a database and files that contain information such as data about the world, the skills, and grammars for the automatic speech recognition module.

The essential component of the AD architecture is the skill, which is located in both levels. In terms of software engineering, a skill is a class that hides data and processes that describe the global behavior of a robot task or action. The core of a skill is the control loop, which can be running (skill is activated) or not (skill is blocked). Skills can be activated by other skills, by a sequencer, or by the decision making system. They can give data or events back to the activating element or to other skills interested in them.

Skills have the following characteristics:

- They have three states: ready (just instantiated), activated (running the control loop), and locked (not running the control loop).
- They have three working modes: continuous, periodic, and by events.
- Each skill is a process. Communication among processes is achieved by Short-Term Memory and events.
- A skill represents one or more tasks or a combination of several skills.
- Each skill has to be subscribed to at least one event and has to define its behavior when the event arises.

The AD architecture allows the generation of complex skills from atomic skills (indivisible skills). Moreover, a skill can be used by different complex skills, and this allows the definition of a flexible architecture.
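The skill states and the composition of atomic skills into complex ones can be sketched as follows. This is only an illustration under simplifying assumptions: the class and skill names are hypothetical, skills are plain objects rather than separate processes, and the working modes and event subscriptions are left out.

```python
from enum import Enum
from typing import List


class SkillState(Enum):
    READY = "ready"          # just instantiated
    ACTIVATED = "activated"  # control loop running
    LOCKED = "locked"        # control loop not running


class Skill:
    """Hypothetical base class; the real AD skills run as separate processes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = SkillState.READY

    def activate(self) -> None:
        self.state = SkillState.ACTIVATED

    def block(self) -> None:
        self.state = SkillState.LOCKED

    def step(self) -> List[str]:
        """One iteration of the control loop; returns the names of skills that ran."""
        if self.state is not SkillState.ACTIVATED:
            return []
        return [self.name]


class ComplexSkill(Skill):
    """A skill built from other skills, which other composites may also reuse."""

    def __init__(self, name: str, parts: List[Skill]) -> None:
        super().__init__(name)
        self.parts = parts

    def activate(self) -> None:
        super().activate()
        for part in self.parts:
            part.activate()

    def step(self) -> List[str]:
        if self.state is not SkillState.ACTIVATED:
            return []
        ran = [self.name]
        for part in self.parts:
            ran.extend(part.step())
        return ran


move = Skill("move_base")
detect = Skill("detect_person")
follow = ComplexSkill("follow_person", [detect, move])
idle_result = follow.step()  # still READY, so nothing runs
follow.activate()
active_result = follow.step()
```

Because `move` and `detect` are ordinary objects, the same instances could be passed to a second `ComplexSkill`, which mirrors the reuse of one skill by several complex skills.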

The decision making system

In bio-inspired systems, it is assumed that the agent/robot itself must decide its own objectives. Since this is our objective, a decision making system based on drives, motivations, emotions, and self-learning is required.

The decision making system has a bidirectional communication with the AD architecture. On one side, the decision making system selects the behavior the robot must execute according to its state. The AD architecture carries out this behavior by activating the corresponding skill or skills (deliberative or automatic). On the other side, the decision making system needs information in order to update the internal and external state of the robot.

Drives and Motivations

The term homeostasis means maintaining a stable internal state. This internal state is described by several variables, each of which must be kept at an ideal level. When the value of one of these variables differs from its ideal one, an error signal occurs: the drive.

In our approach, the autonomous robot has certain needs (drives) and motivations and, following the ideas of Hull and Balkenius, the intensities of the robot's motivations are modeled as a function of its drives and some external stimuli. For this purpose we used Lorenz’s hydraulic model of motivation. In Lorenz’s model, the internal drive strength interacts with the external stimulus strength. If the drive is low, then a strong stimulus is needed to trigger a motivated behavior. If the drive is high, then a mild stimulus is sufficient. The general idea is that we are motivated to eat when we are hungry, and also when we have food in front of us even though we do not really need it.

In our approach, once the intensity of each motivation has been calculated, the motivations compete to be the dominant one, and the dominant motivation determines the inner state of the robot.
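A minimal sketch of this competition is given below. The text does not state the exact function that combines drive and stimulus, so the additive form, the `ACTIVATION_LEVEL` threshold, and the drive names are all assumptions chosen only to reproduce the qualitative behavior: a weak drive needs a strong stimulus, and a strong drive needs little or none.

```python
from typing import Dict, Optional

ACTIVATION_LEVEL = 0.5  # assumed threshold, not taken from the source


def motivation_intensity(drive: float, stimulus: float) -> float:
    """Assumed additive combination: drive and stimulus add up, and the
    motivation is active only once their sum reaches ACTIVATION_LEVEL."""
    total = drive + stimulus
    return total if total >= ACTIVATION_LEVEL else 0.0


def dominant_motivation(
    drives: Dict[str, float], stimuli: Dict[str, float]
) -> Optional[str]:
    """Return the motivation with the highest intensity, or None if none is active."""
    intensities = {
        name: motivation_intensity(drive, stimuli.get(name, 0.0))
        for name, drive in drives.items()
    }
    if not intensities:
        return None
    best = max(intensities, key=intensities.get)
    return best if intensities[best] > 0.0 else None


drives = {"energy": 0.2, "social": 0.9}
no_stimuli = dominant_motivation(drives, {})
# A strong stimulus (for example, a visible charger) lets the weak energy drive win.
with_charger = dominant_motivation(drives, {"energy": 1.0})
```

With no stimuli the high social drive dominates; adding a strong energy-related stimulus makes the energy motivation dominant even though its drive is low.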

In this decision making system, there are no motivational behaviors. This means that the robot does not necessarily know in advance which behaviors to select in order to satisfy the drive related to the dominant motivation. There is a repertoire of behaviors, and whether each can be executed depends on the relation of the robot with its environment, i.e. the external state. For example, the robot will be able to interact with people as long as it is accompanied by someone.

Learning

The objective of this decision making system is for the robot to learn how to behave in order to keep its needs within an acceptable range. For this purpose, the learning process uses a well-known reinforcement learning algorithm, Q-learning, so that the robot learns from its good and bad experiences.

By using this algorithm, the robot learns the value of every state-action pair through its interaction with the environment. That is, it learns the value that every action has in every possible state. The highest value indicates that the corresponding action is the best one to select in that state.

At the beginning of the learning process these values, called the q-values, can all be set to zero, or some of them can be fixed to another value. In the first case, the robot learns from scratch; in the second, the robot has some prior information about behavior selection. These initial values are updated during the learning process.
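For reference, the standard tabular Q-learning update can be sketched as below. The learning rate `alpha`, the discount factor `gamma`, and the state and action names are illustrative assumptions; the text does not give the values or the state encoding used on the robot.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Iterable, Tuple

QTable = DefaultDict[Tuple[Hashable, Hashable], float]


def q_update(
    q: QTable,
    state: Hashable,
    action: Hashable,
    reward: float,
    next_state: Hashable,
    next_actions: Iterable[Hashable],
    alpha: float = 0.5,  # assumed learning rate
    gamma: float = 0.9,  # assumed discount factor
) -> float:
    """Standard update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


def best_action(q: QTable, state: Hashable, actions: Iterable[Hashable]) -> Hashable:
    """Greedy choice: the action with the highest q-value in `state`."""
    return max(actions, key=lambda a: q[(state, a)])


q: QTable = defaultdict(float)  # every q-value starts at zero: learning from scratch
actions = ["recharge", "play"]
q_update(q, "hungry", "recharge", reward=1.0, next_state="sated", next_actions=actions)
q_update(q, "hungry", "play", reward=-1.0, next_state="hungry", next_actions=actions)
chosen = best_action(q, "hungry", actions)
```

Giving the robot prior information, the second case mentioned above, would amount to pre-filling some entries of `q` with nonzero values before the first update.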

Emotions

Happiness and sadness are used in the learning process as the reinforcement function, and they are related to the wellbeing of the robot. The wellbeing of the robot is defined as a function of its drives and measures the degree of satisfaction of its internal needs: as the values of the robot's needs increase, its wellbeing decreases.

In order to define happiness and sadness, we took into account the definition of emotion given by Ortony. In his opinion, emotions occur due to an appraised reaction (positive or negative) to events. According to this point of view, Ortony proposes that happiness occurs because something good happens to the agent. Conversely, sadness appears when something bad happens. In our system, this translates into happiness and sadness being related to the positive and negative variations of the robot's wellbeing.
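The relation between wellbeing and the two emotions can be sketched as follows. The text only states that wellbeing is a function of the drives and decreases as needs grow, so the specific form used here (a constant `IDEAL_WELLBEING` minus the sum of the drives), the absence of any threshold on the variation, and the drive names are assumptions made for illustration.

```python
from typing import Dict, Tuple

IDEAL_WELLBEING = 100.0  # assumed constant, not taken from the source


def wellbeing(drives: Dict[str, float]) -> float:
    """Assumed form: ideal wellbeing minus the sum of the drives."""
    return IDEAL_WELLBEING - sum(drives.values())


def appraise(previous: float, current: float) -> Tuple[str, float]:
    """Map the variation of wellbeing to an emotion label and a reinforcement value."""
    delta = current - previous
    if delta > 0:
        return "happiness", delta  # positive reinforcement
    if delta < 0:
        return "sadness", delta    # negative reinforcement
    return "neutral", 0.0


before = wellbeing({"energy": 30.0, "social": 10.0})
after = wellbeing({"energy": 5.0, "social": 10.0})  # the energy need was reduced
emotion, reinforcement = appraise(before, after)
```

Here the energy drive falls, wellbeing rises, and the variation is returned as a positive reinforcement labeled as happiness; the reverse change would yield sadness with a negative value.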

On the other hand, the role of happiness and sadness as the reinforcement function was inspired by Gadanho’s works, and also by Rolls. Rolls proposes that emotions are states elicited by reinforcements (rewards or punishments), so our actions are oriented to obtaining rewards and avoiding punishments. Following this point of view, in the proposed decision making system, happiness and sadness are used as the positive and negative reinforcement functions, respectively, during the learning process. Moreover, this approach seems consistent with drive reduction theory, in which drive reduction is the chief mechanism of reward.