Our approach targets general scenarios for an end-user who is not an expert programmer. The task is therefore not fixed a priori, but chosen by the end-user her/himself. The challenge we want to address is to make all robot skills accessible to end-users, so that they can enjoy using them and combining them into new skills, extending the robot’s capabilities more easily through natural interaction with the robot.

The robot can guide the user in that construction by showing the possibilities available in each interaction context and by asking precise queries for the necessary information, thus maintaining the coherence of the interaction process. The implemented system therefore not only allows the natural programming of a social robot, but also helps to improve naturalness in Human-Robot Interaction.

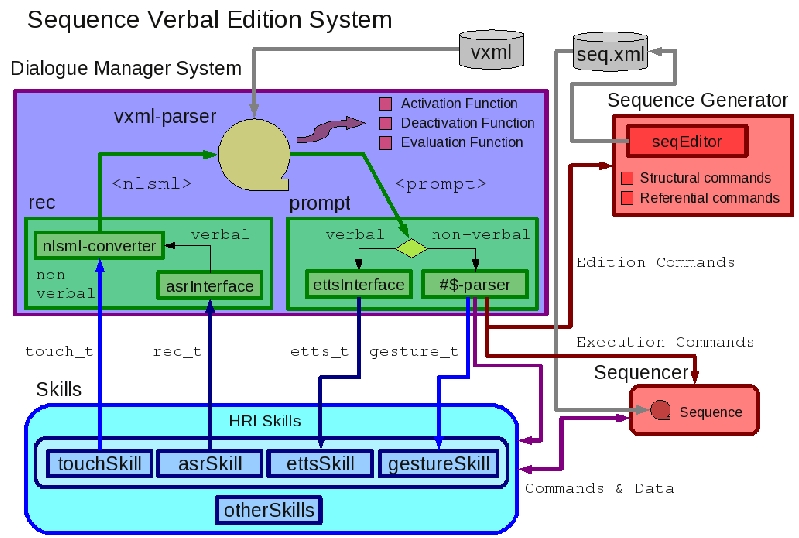

A Sequence Verbal Edition System (SVE) bridges the external utterances of the user and the internal skills of the robot, making these skills accessible by voice. This system allows the user to build new skills (new sequences) from primitive skills, and to reuse the created skills in subsequent sequences.

Interactive Sequence Edition

Semantics and Verbal Commands for the Edition of a Sequence

A sequence is essentially a graphical structure. The main goal of this work is to make the sequence verbally accessible. We have therefore studied how to design a system that translates from verbal utterances to graphical information.

Referential Commands

For establishing the focus of attention for the sequence edition, the user can perform the following referential commands:

- Reference to a Node that is in some part of the already created sequence. E.g. “After raising the left arm…”

- Reference to a Branch, which makes it possible to distinguish between different branches inside the sequence. E.g. “In the first branch…”.

- Reference to a Structure of Multiple Branches. This type of reference is used to conclude a part of the sequence made up of several parallel branches, which may be a conditional selection or a parallel execution. E.g. “You finish all the branches, and then, wait until I touch your left shoulder”.

If the user does not explicitly specify a focus of attention, the Sequence Generation System (SGS) takes one by default. For instance, if the user adds an action, the next structural command is assumed to refer to that action, so the SGS sets the focus of attention on the newly added action by default.
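The default-focus rule can be illustrated with a minimal sketch. It assumes a purely linear sequence, and the names `Node`, `SequenceEditor`, `refer_to` and `add` are hypothetical, chosen only for illustration; they are not the identifiers used in the implemented system.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    kind: str   # "action" (step) or "condition" (transition)
    label: str


@dataclass
class SequenceEditor:
    """Hypothetical editor for a linear sequence with a focus of attention."""
    nodes: List[Node] = field(default_factory=list)
    focus: Optional[int] = None  # index of the node currently in focus

    def refer_to(self, label: str) -> None:
        """Explicit referential command: move the focus to an existing node."""
        for index, node in enumerate(self.nodes):
            if node.label == label:
                self.focus = index
                return
        raise KeyError(label)

    def add(self, kind: str, label: str) -> None:
        """Insert a node right after the focus; the new node becomes the focus."""
        position = len(self.nodes) if self.focus is None else self.focus + 1
        self.nodes.insert(position, Node(kind, label))
        self.focus = position  # default focus: the newly added node
```

With this sketch, successive calls to `add` append nodes one after another without any explicit reference, whereas a call to `refer_to` (the analogue of “After raising the left arm…”) makes the next addition land right after the referenced node.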

Structural Commands

In the implemented system, end-users can perform the following structural commands:

- Addition of a node. The node can be a step (action) or a transition (condition). E.g.: the utterance “Raise the left arm” will add an action. The utterance “then…” or “Wait until I touch your head” will add a condition.

- Removal of a node. All nodes (steps or transitions) in the edition focus will be removed. E.g.: “Remove the last action.”

- Opening or closing a structure of multiple branches, which can be a sequence selection or simultaneous sequences, as explained above. E.g.: “You consider four possibilities” will open a selection between 4 branches; “You do three things, at once” will open 3 simultaneous branches.

- Loops, that is, joining one part of the sequence to a previous one. E.g.: “Go back to the beginning”.

Note that adding a node involves defining the content of that node, for example, if it is an action, its parameters.
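As a rough illustration of how these structural commands could act on the graphical structure, the sketch below models the sequence as a small directed graph. This is an assumption made for the example: the class `SequenceGraph` and its methods are hypothetical and do not reproduce the actual representation used by the system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class SequenceGraph:
    """Hypothetical graph model: each node has a kind and a content string."""
    nodes: Dict[int, Tuple[str, str]] = field(default_factory=dict)
    edges: List[Tuple[int, int]] = field(default_factory=list)
    next_id: int = 0

    def add_node(self, kind: str, content: str, after: Optional[int] = None) -> int:
        """Add a step ("action") or a transition ("condition") after a node."""
        node_id = self.next_id
        self.next_id += 1
        self.nodes[node_id] = (kind, content)
        if after is not None:
            self.edges.append((after, node_id))
        return node_id

    def remove_node(self, node_id: int) -> None:
        """Remove a node and reconnect its predecessors to its successors."""
        preds = [a for a, b in self.edges if b == node_id]
        succs = [b for a, b in self.edges if a == node_id]
        self.edges = [(a, b) for a, b in self.edges if node_id not in (a, b)]
        self.edges += [(p, s) for p in preds for s in succs]
        del self.nodes[node_id]

    def open_branches(self, mode: str, count: int, after: int) -> List[int]:
        """Open `count` branches; mode is "selection" or "simultaneous"."""
        split = self.add_node(mode, f"{count} branches", after)
        return [self.add_node("branch", f"branch {i + 1}", split) for i in range(count)]

    def loop(self, source: int, target: int) -> None:
        """Join a later part of the sequence to an earlier node."""
        self.edges.append((source, target))
```

In this sketch, “You do three things, at once” would correspond to `open_branches("simultaneous", 3, after=...)`, and “Go back to the beginning” to a `loop` call whose target is the first node.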

The Natural Programming System presented here allows multiple functionalities that can be easily implemented. One open question for a programming system is how to add new functions to the initial set of actions and conditions without changing the design of the system, that is, at execution time. In our approach, since the semantic grammar can be changed at run-time, it is possible to add new terms, e.g. new action names, to the vocabulary of the robot. A spelling detection capability can also help in this respect. On the other hand, since actions and conditions are implemented as an XML sequence with Python functions, the newly created function could be expressed in these terms.
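The idea of adding new terms at run-time can be sketched as a vocabulary that maps spoken names to Python callables. This is only an illustration under stated assumptions: the class `Vocabulary` and its methods are hypothetical, the primitives merely log their own name instead of commanding the robot, and neither the semantic grammar nor the XML sequence format is modelled.

```python
from typing import Callable, Dict, List

Skill = Callable[[], List[str]]  # a skill returns the log of primitives it ran


class Vocabulary:
    """Hypothetical run-time vocabulary: spoken name -> Python callable."""

    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def add_primitive(self, name: str) -> None:
        # A real primitive would command the robot; here it only logs its name.
        key = name.lower()
        self._skills[key] = lambda: [key]

    def add_sequence(self, name: str, steps: List[str]) -> None:
        """Register a new term at run time, built from already known terms."""
        skills = [self._skills[step.lower()] for step in steps]  # KeyError if unknown

        def run() -> List[str]:
            log: List[str] = []
            for skill in skills:
                log.extend(skill())
            return log

        self._skills[name.lower()] = run

    def execute(self, name: str) -> List[str]:
        """Run the skill registered under `name` and return its log."""
        return self._skills[name.lower()]()
```

Because a sequence is stored in the same table as the primitives, a newly created skill can itself appear as a step in a later call to `add_sequence`, without any change to the class.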

New skills are created from primitive ones, and each newly created skill can be reused in subsequent sequence editions. However, the innate primitive set of actions and conditions plays an important role: this set has to cover all the possibilities of the robot. Here we have used just a small subset of the possibilities of Maggie: the low-level movements of the different degrees of freedom (DOF) and the touch sensors.

Maggie also has many other skills that can be used in a new sequence. For instance, verbal skills such as ettsSkill and asrSkill could be used as follows: “When I say ‘stop’, you stop.”, or “If I touch you on the side, you begin to laugh.” Many conditions can also be included in the set of primitives, for instance “wait 5 seconds”, “when the battery level is below 50%…”, “If you detect an obstacle in the Laser…”, etc.

In other natural programming systems, the action primitives are designed one by one from a set of cue user utterances, so the primitives are well adapted to the user’s speech. This method has the advantage of easily covering a wide range of user utterances, provided the application domain is well constrained.

Nevertheless, this method of designing the “innate set” does not ensure that the primitives cover all the action possibilities of the robot. In our approach it was very important to cover all such possibilities, and Maggie has many of them. The innate primitive set is therefore closer to the low-level action-perception space of the robot.

At the speech or symbolic level, the semantic-CFG rules perform the necessary transformation from the user utterance, which is natural, into the action-perception space of the robot, which follows a formal model. The DMS is in charge of guiding the user and translating his/her natural language into an internal representation in terms of these primitives.

Experimental tests with end-users show that they greatly enjoy playing with Maggie at the game of “teaching how to do things”. The robot is still not able to adapt itself to the complexity of the user’s communication rhythms, and it is the user who has to learn how and when to articulate the utterances. Nevertheless, we have found that end-users make this adaptation intuitively and easily, and that they enjoy doing so. End-users see Maggie as a baby agent that is still developing, which produces a kind of sense of superiority.

The system readily impresses end-users in two ways: on the interaction side, through the naturalness of the interaction, and on the execution side, because the robot performs what the user has told it to do.