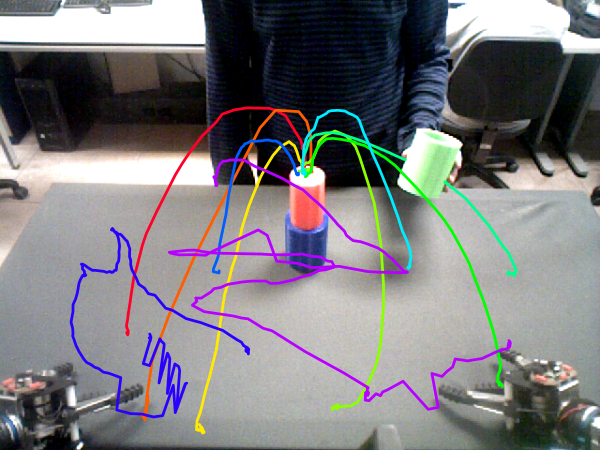

The search for the Holy Grail of generalizing robot actions continues! In Continuous Goal-Directed Actions (CGDA), our robot imitation framework, an action is modelled as the changes it produces in the environment. First, record every feature you can from a set of user demonstrations. By features, we mean features! The angle of robot joint q2, the Z coordinate of a human hand, the percentage of a wall painted, the square of the room temperature plus ambient noise… Throw in a demonstration and feature selection algorithm, and let it decide which demonstrations were consistent and which features are relevant. You now have an action encoded as a CGDA model, which is essentially a multi-dimensional time series. As described in the first CGDA conference paper, recognition can be performed by comparing costs such as those computed with Dynamic Time Warping (DTW), and execution can be achieved with evolutionary algorithms in a simulated environment. While we have done some work on combining sequences of random movements, we are mostly content with the evolutionary strategies we later developed, such as IET. Additional references include S. Morante's original PhD thesis.
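To make the recognition step a bit more concrete, here is a minimal sketch of how a DTW cost could be used to pick the closest action model for an observed feature trajectory. It is an illustration under our own assumptions, not the code from the paper: the function names `dtw_cost` and `recognize`, the two toy models, and the choice of two features (fraction of wall painted, hand Z coordinate) are all hypothetical, and a real pipeline would likely normalize the features first.

```python
import math


def dtw_cost(a, b):
    """Classic DTW cost between two multi-dimensional time series.

    `a` and `b` are sequences of feature vectors with the same dimension.
    The local cost is the Euclidean distance between feature vectors
    (an assumption made for this sketch).
    """
    n, m = len(a), len(b)
    # D[i][j] = minimal accumulated cost aligning a[:i] with b[:j]
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(a[i - 1], b[j - 1])
            D[i][j] = local + min(D[i - 1][j],      # step in a only
                                  D[i][j - 1],      # step in b only
                                  D[i - 1][j - 1])  # step in both
    return D[n][m]


def recognize(query, models):
    """Return the name of the model with the lowest DTW cost to `query`."""
    return min(models, key=lambda name: dtw_cost(query, models[name]))


# Hypothetical toy models: each point is (fraction painted, hand Z in metres).
models = {
    "paint": [(0.0, 0.0), (0.5, 0.2), (1.0, 0.4)],
    "wax":   [(1.0, 1.0), (0.5, 1.0), (0.0, 1.0)],
}

# An observed trajectory, sampled at a different rate than the models.
query = [(0.0, 0.0), (0.1, 0.05), (0.5, 0.2), (0.9, 0.4), (1.0, 0.4)]

print(recognize(query, models))  # the "paint" model has the lowest cost here
```

DTW is handy here because the query and the model do not need the same number of samples: the warping absorbs differences in demonstration speed, so only the shape of the feature trajectory matters.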